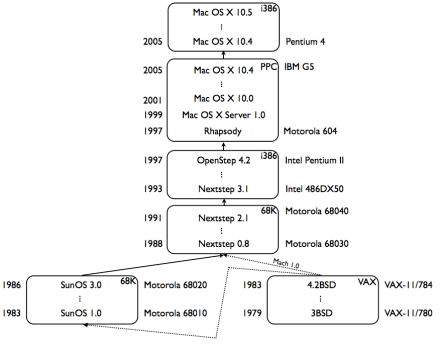

The History of OS Migration

Operating system vendors face this problem once or twice a decade: They need to migrate their user base from their old operating system to their very different new one, or they need to switch from one CPU architecture to another one, and they want to enable users to run old applications unmodified, and help developers port their applications to the new OS. Let us look at how this has been done in the last 3 decades, looking at DOS/Windows, Macintosh, Amiga and Palm.